“Don’t explain computers to laymen. Simpler to explain sex to a virgin.”

So wrote the venerable Robert A. Heinlein in The Moon Is a Harsh Mistress (1966).

I suspect I’m not alone in trying to understand exactly what artificial intelligence is capable of in general, how it would approach writing a Stargate scene in particular, and just how impossibly hard it’s going to be to realize Brad Wright’s dream of a brand-new Stargate script for his stars to perform.

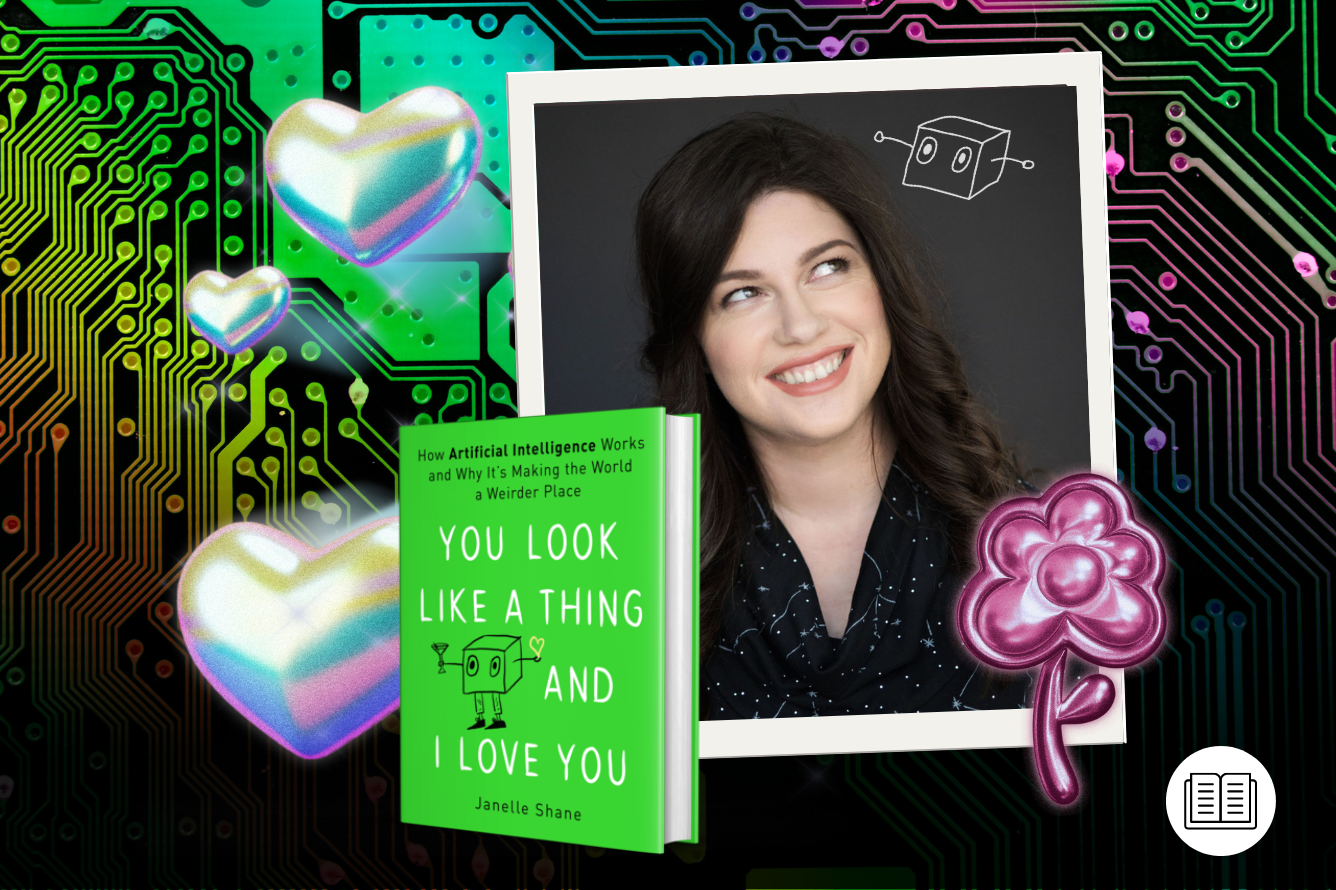

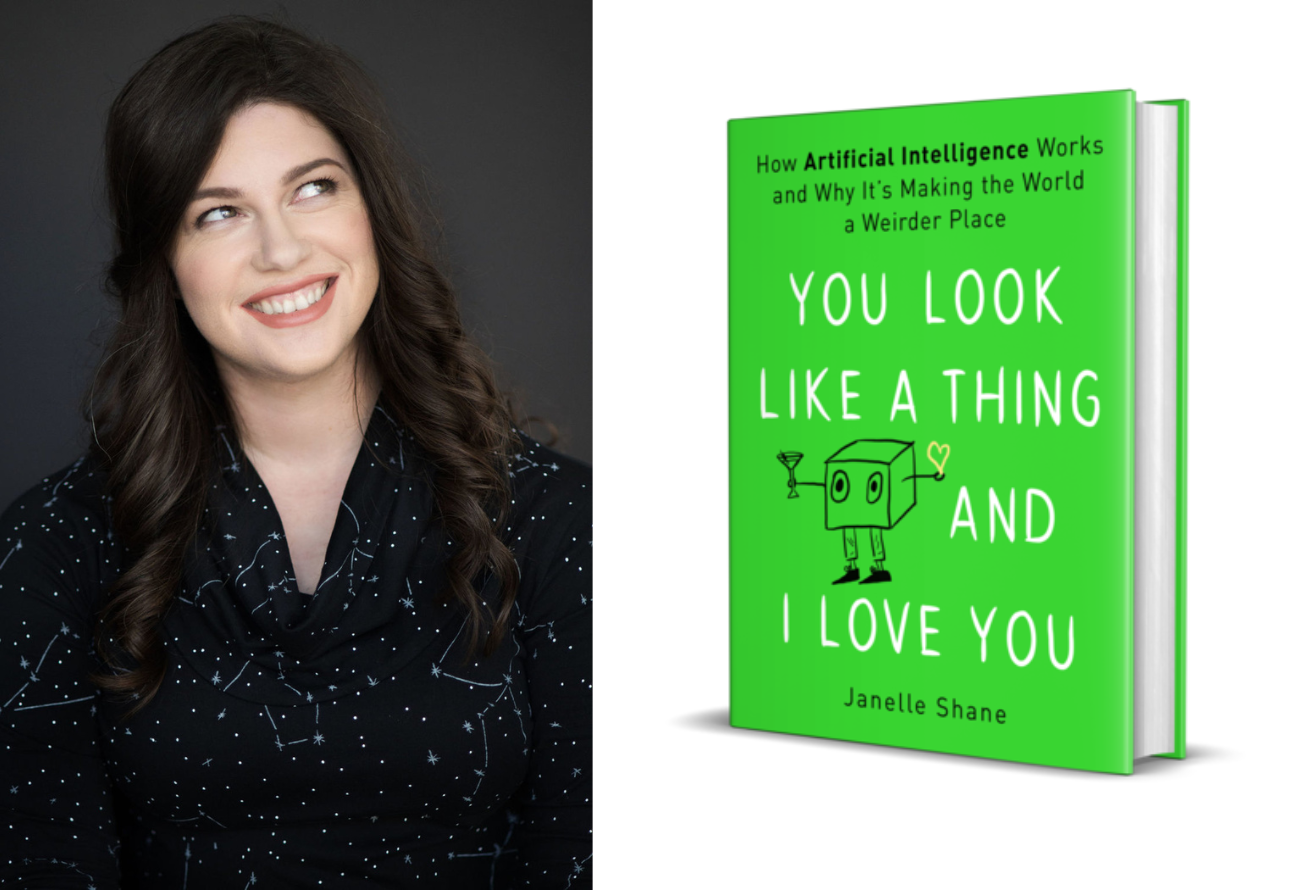

“The most basic difference between A.I. and the kinds of computer programs that we’ve been using for longer is that A.I. does not follow specific instructions on how to solve a problem,” explains Janelle Shane, research scientist, custodian of the fascinating aiweirdness.com, and author of You Look Like a Thing and I Love You: How AI Works and Why It’s Making the World a Weirder Place (2019).

“Instead, you give it the goal and it comes up with an approach to solving this problem. And you know, you may not even be able to tell exactly what its approach is, which can be useful if it’s a problem that you don’t know how to solve.

“If you don’t know how to write down specific instructions for ‘What does a bird look like?’ The beak, okay, well, what does the beak look like? How do you differentiate a beak from anything else that’s pointy, like almonds or something? And so the nice thing about working with A.I. for some of these applications is that you give it the goal – these are birds, these are not birds – and it can figure out these internal rules that help it do the tasks.”
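For the technically curious, here’s roughly what “give it the goal” looks like in practice. What follows is a minimal Python sketch of a perceptron, one of the oldest and simplest learning algorithms; it is an illustration of the general idea, not a description of any system Shane uses. The features (feathers, beak, pointiness, wings) and the five labelled examples are entirely hypothetical, and real image recognisers learn from raw pixels with vastly larger models. The point is that no rule for “bird” is ever written down: the program only nudges its own weights whenever it guesses a label wrong.

```python
"""A toy perceptron that learns "bird / not bird" from labelled examples.

Nobody writes the rule down; the program adjusts its own weights until its
guesses match the labels. The features and examples are hypothetical,
invented purely for illustration.
"""

# Hypothetical hand-picked features: (has_feathers, has_beak, is_pointy, has_wings)
TRAINING_DATA = [
    ("robin",          (1, 1, 1, 1), 1),  # 1 = bird
    ("duck",           (1, 1, 0, 1), 1),
    ("almond",         (0, 0, 1, 0), 0),  # 0 = not a bird: pointy, but no beak
    ("bat",            (0, 0, 0, 1), 0),  # wings, but no feathers
    ("feather duster", (1, 0, 0, 0), 0),  # feathers, but nothing else
]


def predict(weights, bias, features):
    """Return 1 (bird) if the weighted sum is positive, else 0 (not a bird)."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train(data, max_epochs=100):
    """Classic perceptron learning rule: change the weights only on mistakes."""
    weights = [0] * len(data[0][1])
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for _name, features, label in data:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + error * x for w, x in zip(weights, features)]
                bias += error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(TRAINING_DATA)
    print("learned weights:", weights, "bias:", bias)
    # Try it on things it has never seen before.
    for name, features in [("kiwi", (1, 1, 1, 0)), ("rubber duck", (0, 1, 0, 0))]:
        verdict = "bird" if predict(weights, bias, features) else "not a bird"
        print(f"{name}: {verdict}")
```

On this toy data it settles on weights that count feathers and beaks and ignore pointiness and wings entirely, so an unseen kiwi comes out as a bird and a rubber duck, beak notwithstanding, does not. Those are rules it inferred from the examples; give it different examples and it would infer different, possibly stranger, ones.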

Machine Learning A.I. Explained

This machine learning process lurks behind a number of apps and devices we use on a daily basis, most often focused on learning our habits to improve our customer experience like sycophantic pocket butlers (Siri, Alexa, Nest), or to flog us a load of unnecessary tat that matches the idle whimsy of our browsing history (Amazon, Facebook, eBay).

Netflix is a pretty good example of this that we’re all likely to have encountered. It not only puts together ever more nuanced recommendations of things you might want to watch based on your habits, but also tweaks how it presents those recommendations to emphasize different elements. If you watch a ton of ’80s comedies, you’ll get a goofy thumbnail for Stranger Things showing the lads in their Ghostbusters get-up. Watch a lot of superhero shows and you might get an intense Eleven with a nosebleed and Professor-X-style telekinesis hands. It’s smart, but it’s ultimately a simple task, made challenging only by the sheer volume of users and data to wrestle with.

“These are the types of nuanced problems that we have a lot of data for, but we don’t have a good description that we can write for them. So one of the first commercial applications of A.I. was in language translation. Google Translate was able to figure out how to do this language stuff without a human having to sit down and write a detailed [decision] tree of how to do it. But then we also have A.I. doing really well in playing games. You may have heard of A.I. players beating humans in chess and Go and certain video games.

“The thing about tasks like games is that they tend to be really, really narrow tasks. That turns out to be kind of the differentiator between where A.I. is going to behave pretty seamlessly, and where it’s going to struggle and start to show mistakes.

“The narrower the chunk of the world that you can carve off for it to work with, the better.”

Can an A.I. Write a Screenplay?

Whilst it’s an open secret in the industry that Riverdale is written entirely by predictive text, having artificial intelligence create an original Stargate script is far more challenging. A.I. can’t simply conjure something from nothing; it needs a diet of examples to remix.

“Like in other A.I. examples, you have to give it the goal first,” says Shane. “And in this case, when you’re trying to generate text, usually the goal you give it is ‘Here is a bunch of text that we have from somewhere, try to predict which letters or which words come next in the sequence’. If you’ve given it a bunch of scripts as training data, then it may be fairly good at figuring out what comes next in the script. If you give it cooking recipes as training data instead, it will definitely start adding vanilla and sautéing everything.”
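To see why the training diet matters so much, here is a deliberately tiny Python sketch of the “predict what comes next” goal: a bigram model that simply counts which word follows which. It is a toy stand-in, not how modern text generators are built (those use large neural networks trained on vastly more text), and both training snippets, along with the names `train_bigrams`, `most_likely_next` and `generate`, are invented for illustration. Feed it a scrap of script and the word “the” leads to “gate”; feed it a scrap of recipes and the same word leads to “vanilla”.

```python
"""A toy next-word predictor (a bigram model).

It learns nothing except "which word tends to follow which" from whatever
text it is fed. Both training snippets are hypothetical, invented for
illustration only.
"""
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, how often each other word comes right after it."""
    words = text.split()
    model = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def most_likely_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word)  # .get() does not create empty entries
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, length):
    """Greedily chain predictions: always pick the single most likely next word."""
    words = [start]
    for _ in range(length):
        nxt = most_likely_next(model, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


SCRIPT = "hello how are you ? i am doing all right . where is the gate ? the gate is open ."
RECIPES = "add the vanilla . saute the onions . add the sugar and the vanilla ."

if __name__ == "__main__":
    script_model = train_bigrams(SCRIPT)
    recipe_model = train_bigrams(RECIPES)
    # Same prompt, different training data, different "instinct".
    print(most_likely_next(script_model, "the"))  # gate
    print(most_likely_next(recipe_model, "the"))  # vanilla
    print(generate(script_model, "hello", 4))     # hello how are you ?
```

Because `generate` always takes the single most likely next word, toy models like this can easily fall into loops, and nothing in them knows what a scene, a character or a joke is; they only know what tended to come next in the text they were given.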

For our Stargate A.I. project, coherence – or perhaps the illusion of coherence – is the Holy Grail. A.I. has a reputation for generating surreal word salad, and the title of Shane’s book (You Look Like a Thing and I Love You) comes from one of a series of perplexing chat-up lines she had an A.I. generate. Nobody had explained the rules of innuendo or suggestion to it, so the poor thing had no real grasp of what made a chat-up line a chat-up line.

“The idea is you don’t manually teach it anything, except by giving it lots of examples where the words ‘Oh, hello, how are you?’ are followed by another character saying, ‘Oh, I’m doing all right,’ or something like this. When there are these kinds of formulaic things that happen all the time, it tends to pick them up more quickly.

“Then try to teach it more nuanced things like ‘No, this character is always angry’, or ‘This character is always uptight’. If there are enough examples of this character being angry or uptight, then the A.I. tends to start learning the words and phrases that go along with that, but you have to have a pretty sophisticated state-of-the-art A.I. to handle that at this point.”

This is effectively what predictive text or chatbots do. They calculate the most likely next word in the sentence or the most likely response to a question, but there’s no real understanding of what is actually important about a sentence or story. That’s why you get things like Corridor Crew’s bizarre Avatar 2 script.

Obviously, we don’t want that; we’re here to celebrate Stargate, not drag it through a river of open sewage.